Poster Award Recognitions

Undergraduate Best Poster

Thomas Joyce, University of Notre Dame

“Learning Analytics in Organic Chemistry”

Runner Up

Ashley Armelin, Ava DeCroix, Allison Skly, & Sarah Cullinan, University of Notre Dame & St. Mary’s College

“Leave them kids (data) alone: privacy concerns in assessment data”

Graduate Best Poster

Juan D. Pinto & Qianhui Liu, University of Illinois Urbana-Champaign

“Trustworthy AI requires algorithmic interpretability: Some takeaways from recent uses of eXplainable AI (XAI) in education”

Runner Up

Mengxia Yu, University of Notre Dame

“Towards Controllable Multiple-Choice Quiz Question Generation for STEM Subjects via Large Language Models”

Poster Abstracts

AI in Computer Science Education: A Systematic Review of Empirical Research in 2003-2023

Ke Zhang, Wayne State University

This poster will present a systematic review of research on artificial intelligence (AI) in computer science education (CSEd) published in 2003-2023. Following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines, 20 empirical studies were selected from the Web of Science, ACM Digital Library, IEEE Xplore database, and specialized journals on AI in education. Multiple analyses were conducted in this review, including selected bibliometrics, content analysis, and categorical meta-trends analysis, to explore the current state of research publications on AI for CSEd and to pinpoint specific AI technology features and their CSEd benefits. More specifically, this review characterized the impact of AI in CSEd as cognitive (e.g., learning outcomes, test results) or affective (e.g., self-efficacy, motivation, satisfaction, learning interest, attitude, and engagement). The in-depth analysis also examined the exact features and functions of the AI technologies reported in these studies (i.e., chatbots, intelligent tutoring systems, and adaptive and personalized recommendation systems) and how they might be linked to the reported educational impact. Notably, this review identified a few serious gaps, including the lack of theoretical support from education and the neglect of diversity, equity, and inclusion in AI for CSEd. Scaled, collaborative, interdisciplinary, or even transdisciplinary efforts are necessary for AI research and development endeavors in CSEd.
Based on the review, this poster will (1) present the synthesis and critique of the existing research literature on AI in CSEd published in 2003-2023, (2) highlight the trends of AI in CSEd research publications, (3) showcase AI technologies in CSEd, (4) explain the educational benefits of AI in CSEd, (5) specify the critical gaps in the research, development and implementation of AI for CSEd, and (6) stimulate and facilitate rich discussions on the practical implications and the future directions from a multitude of perspectives.

Future Advising with AI: Examining ChatGPT’s Role in Transforming Academic Advising

Julia Qian, University of Notre Dame

The goal of this project is to explore the potential of AI in academic advising, as well as the benefits it could bring to students, advisors, and universities. The objective of this session is to help administrators and advisors understand how AI influences advising and how the academic advisor’s role changes through the application of ChatGPT. We will also discuss ethical issues and limitations of applying ChatGPT in academic advising. As practitioners, we understand that current academic advising models can sometimes be inefficient and labor-intensive for advisors and universities, as well as time-consuming for students. AI has the potential to improve this process by automating certain aspects of academic advising and providing more personalized advice to students. This will allow advisors to shift their energy to connecting with students on a personal level and sharing academic and holistic development strategies with them.

Learning objectives:

• Understand the capabilities of GPT models related to academic advising and various tools that may enhance the advising experience.

• Examine advising practice in participants’ institutions and understand the applications of GPT models in their daily advising operations.

• Identify and develop strategies for developing and strengthening the use of GPT models in advising practices.

Leave them kids (data) alone: privacy concerns in assessment data

Nuno Moniz, University of Notre Dame

The right to privacy has been a discussion topic in computing since the late 60s. However, today, we face the pressing problem of data pervasiveness and the issues it raises for computation and society. Detailed information records on individuals – microdata – are often crucial for analysis and mining tasks in data-driven projects. In this setting, the privacy of data subjects is constantly challenged as data is re-used and analyzed on an unprecedented scale, raising concerns about how organizations and institutions handle their data and private information. Our work focuses on assessment data, specifically student performance and behavior, and the practical challenges that guaranteeing individual privacy poses for every stakeholder in the education ecosystem. After analyzing multiple assessment data sets that are publicly available, we confirm that 1) all have demonstrated issues with single-outs (uniquely identifiable individuals) even when using small sets of quasi-identifiers; 2) privacy risk ranges considerably from roughly 10% to 40%; and 3) even public data sets released with the application of privacy-preserving strategies show privacy concerns related to the number of single-outs. Our work provides a crucial contribution to the advancement of AI applications using assessment data. It highlights the critical importance of leveraging state-of-the-art privacy-preserving methods to ensure the individual privacy of students, particularly minors. In addition, it underscores the need (and urgency) to improve training and collaborations that would ensure privacy-safe assessment data to further our understanding of education, social/cultural/economic determinants of success, and the ensuing impact of interventions.
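The notion of a single-out used in the abstract above can be illustrated with a short sketch. It counts the records whose combination of quasi-identifier values is unique in a table. The field names and toy records are hypothetical, and the abstract's privacy-risk figures may rest on a different measure; this is only one simple way to quantify the idea.

```python
from collections import Counter


def single_out_rate(records, quasi_identifiers):
    """Fraction of records whose quasi-identifier combination is unique."""
    if not records:
        return 0.0
    keys = [tuple(r[q] for q in quasi_identifiers) for r in records]
    counts = Counter(keys)
    singles = sum(1 for k in keys if counts[k] == 1)
    return singles / len(records)


# Hypothetical toy records, not drawn from any real assessment data set.
records = [
    {"age": 15, "zip": "46556", "gender": "F", "score": 88},
    {"age": 15, "zip": "46556", "gender": "F", "score": 71},
    {"age": 16, "zip": "46556", "gender": "M", "score": 93},
    {"age": 15, "zip": "46617", "gender": "M", "score": 64},
    {"age": 16, "zip": "46617", "gender": "F", "score": 79},
]

# Three of the five records are unique on (age, zip, gender).
rate = single_out_rate(records, ["age", "zip", "gender"])
```

Adding quasi-identifiers can only keep or raise this rate, which is why even small sets of seemingly harmless attributes can isolate individuals.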

Towards Controllable Multiple-Choice Quiz Question Generation for STEM Subjects via Large Language Models

Mengxia Yu, University of Notre Dame

In the realm of STEM education, the post-lecture assessment is a crucial element that reinforces the learning objectives and gauges students’ understanding. The generation of multiple-choice questions (MCQs) as a formative assessment tool can be a tedious task for educators. Automated Question Generation (QG) systems have been studied, yet their utility is often constrained by the lack of control over the generated content. Our research aims to develop a QG system that gives lecturers control over the characteristics of the generated MCQs, aligning them closely with the lecture’s core themes and desired difficulty levels. By utilizing the transcripts of video lectures as input, the proposed system aims to generate MCQs wherein the difficulty level, central content, knowledge dimension, and cognitive level are controllable as per the educator’s preferences. We construct a dataset comprising transcripts and MCQs collected from the TED-Ed learning platform. We design a set of control codes and evaluation metrics to measure the controllability of the QG system. We choose large language models (LLMs) as base models, as LLMs show great potential to handle a variety of language tasks without additional training on human-annotated data. We experiment with various LLM prompting frameworks to identify the approach most conducive to controlled MCQ generation. Preliminary findings indicate a promising direction towards achieving controllable QG systems, thereby empowering educators with a potent tool to design formative assessments that echo their pedagogical goals precisely. This initiative marks a step forward in alleviating the assessment design burden from educators while ensuring the quality and relevance of the quizzes.

Trustworthy AI requires algorithmic interpretability: Some takeaways from recent uses of eXplainable AI (XAI) in education

Juan D. Pinto, University of Illinois Urbana-Champaign

Amidst the current surge of excitement and skepticism surrounding AI, there is a pressing need to foster trust in educational AI models among both students (Putnam & Conati, 2019) and teachers (Nazaretsky et al., 2022). A pivotal aspect of building this trust lies in the ability to provide clear explanations for the decisions made by these complex models. While interpretability may not be a requirement of powerful AI, it serves as a foundational step towards engendering trust. For this reason, researchers in education have turned to the field of eXplainable AI (XAI) for insights on demystifying “black-box” models. Unfortunately, commonly used post-hoc techniques in XAI have shown inherent limitations, including a lack of agreement between techniques (Krishna et al., 2022), the risk of generating unjustified examples for counterfactual explanations (Laugel et al., 2019), and the “blind” assumptions that must be made when treating a model as a literal black box (Rudin, 2019). Yet based on our review of the literature, there appears to be a general lack of awareness among educational data scientists regarding these issues. Studies often use the explanations created by a single approach without questioning their fidelity to the model’s inner workings. Moreover, there is a tendency to use XAI as a simple mechanism for designing interventions under the assumption that the model captures causal relationships in the real world with fidelity. This can produce conclusions that are twice removed from reality. Recently, some researchers are bringing awareness of the disagreement problem to education (Swamy et al., 2023), while others are seeking ways to assimilate techniques for causal modeling from the social sciences (Cohausz, 2022). 
Yet ultimately, if the goal is to create explanations that can be faithful to the model and simultaneously instill trust, then researchers may need to forgo post-hoc approaches in favor of the more challenging task of designing AI models for education that are intrinsically interpretable.

References

Cohausz, L. (2022). When Probabilities Are Not Enough – A Framework for Causal Explanations of Student Success Models. Journal of Educational Data Mining, 14(3), 52–75. https://doi.org/10.5281/zenodo.7304800

Krishna, S., Han, T., Gu, A., Pombra, J., Jabbari, S., Wu, S., & Lakkaraju, H. (2022). The disagreement problem in explainable machine learning: A practitioner’s perspective (No. arXiv:2202.01602). arXiv. https://doi.org/10.48550/arXiv.2202.01602

Laugel, T., Lesot, M.-J., Marsala, C., Renard, X., & Detyniecki, M. (2019). The dangers of post-hoc interpretability: Unjustified counterfactual explanations (No. arXiv:1907.09294). arXiv. https://arxiv.org/abs/1907.09294

Nazaretsky, T., Cukurova, M., & Alexandron, G. (2022). An instrument for measuring teachers’ trust in AI-based educational technology. LAK22: 12th International Learning Analytics and Knowledge Conference, 56–66. https://doi.org/10.1145/3506860.3506866

Putnam, V., & Conati, C. (2019). Exploring the need for explainable artificial intelligence (XAI) in intelligent tutoring systems (ITS). IUI Workshops’19.

Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1(5), 206–215. https://doi.org/10.1038/s42256-019-0048-x

Swamy, V., Du, S., Marras, M., & Kaser, T. (2023). Trusting the explainers: Teacher validation of explainable artificial intelligence for course design. LAK23: 13th International Learning Analytics and Knowledge Conference, 345–356. https://doi.org/10.1145/3576050.3576147
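The lack of agreement between post-hoc techniques discussed in this abstract can be made concrete with a top-k feature agreement score: the fraction of features shared by the k most important features of two explanations. The sketch below is a minimal illustration in that spirit. The feature names and attribution values are hypothetical, and it is not the analysis performed by the authors.

```python
def top_k_features(attributions, k):
    """Names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def feature_agreement(expl_a, expl_b, k):
    """Fraction of the top-k features that two explanations have in common."""
    return len(top_k_features(expl_a, k) & top_k_features(expl_b, k)) / k


# Hypothetical attribution scores from two post-hoc explainers for one student.
method_a = {"quiz_avg": 0.42, "logins": 0.31, "forum_posts": -0.05, "video_time": 0.12}
method_b = {"quiz_avg": 0.38, "logins": 0.02, "forum_posts": 0.25, "video_time": -0.20}

# The two explainers share only "quiz_avg" among their top-2 features.
agreement = feature_agreement(method_a, method_b, k=2)
```

A low score signals that an intervention designed from one explainer's output might look quite different if another explainer had been used.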

A Comparison of the Efficiency of Two Image Processing Models on Identifying Students’ Written Solutions to Fraction Problems

Qihan Lu, Rutgers University

With AI advancements, newly developed automated scoring systems can interpret and evaluate students’ written responses, accurately and efficiently diagnosing error types. However, reviewing young children’s handwritten solutions to pinpoint error types and underlying misconceptions remains a complex task. The development of multimodal machine learning algorithms for simultaneous comprehension and extraction of information from textual and graphic elements is still in its early stages, particularly in K-12 education. We aimed to develop and test an AI model to identify 0-1 number lines in images of students’ handwritten fraction responses. The key elements include the number line image and multiple numerals. In this study, we compared two image processing AI tools, YOLOv8 and Detectron2. The dataset used in our study contained 139k images of student responses to fraction problems with a 0-1 number line, which involve numerical, text, and graphic information. We manually labeled images to create datasets for YOLOv8 and Detectron2. So far, we have annotated 576 images for YOLOv8 and 206 for Detectron2; 70% are used as the training set and 30% as the test set. Experimental results show that the YOLOv8 model has a correctness of 89% with a training time of 5 minutes, whereas the Detectron2 model has a correctness of 86% with a training time of 16 minutes. Our model development and comparison are still in progress; we anticipate completing and reporting final results when presenting this work. Although AI platforms have been developed for general image processing, existing research has rarely been applied to young children’s handwritten solutions, which present unique challenges. This study, as an exploration of image processing of elementary students’ handwritten mathematics solutions, sheds light on future research and practice in developing automated grading and diagnostic systems for students’ mathematics problem solving.

Are Universities Ready for Generative Artificial Intelligence (GAI)? Topic Modeling and Sentiment Analysis of 100 Universities’ Policy Documents

Brian Waltman, The University of the Incarnate Word

Generative Artificial Intelligence (GAI), including ChatGPT and other tools, is making a profound impact on academia. Many universities across the United States and the world are struggling with how to incorporate this new technology into learning without compromising the importance of critical thinking and originality of ideas in students.

The purpose of this study is to determine whether there are themes common to the universities involved and what the general sentiment is about the use of GAI. Accessing websites from 100 universities across the United States (N = 100), I have gathered documents including formal policies, guidelines for both instructors and students on proper usage, as well as articles providing various opinions on the use of GAI in academia (n = 200). The research questions are: What are the themes common across universities regarding GAI? What is the overall sentiment toward GAI?

The methods used to answer the research questions are topic model analysis and sentiment analysis. Topic model analysis employs Latent Dirichlet Allocation (LDA) to uncover prevalent topics in text data, and sentiment analysis reveals the underlying emotions and attitudes. The preliminary outcomes of the topic model revealed five themes: AI Integration and Educational Transformation; Ethics, Integrity, and Policy in AI Education; AI’s Influence on Academic Skills and Faculty Development; Student Experience and Workforce Preparation; and Research, Innovation, and Future Directions. Overall, the sentiment across the documents tends to be slightly positive with moderate subjectivity, suggesting a generally positive but measured discussion regarding GAI in an academic context.

Enhancing Online Education: An AI Framework for Non-Verbal Communication Analysis and Collaborative Learning Enhancement

Mohammed Almutairi, University of Notre Dame

The transition to online education during the COVID-19 pandemic has significantly disrupted traditional pedagogical interactions, especially in terms of non-verbal communication, which plays an instrumental role in student engagement and the facilitation of collaborative learning. Non-verbal cues such as facial expressions, tone of voice, and body language are essential for the psychological underpinnings of rapport and empathy within the educational environment. These cues support the emotional and cognitive processes underlying learning by providing context, nuance, and immediacy to the instructional content. In the online setting, the absence of these cues can impede the natural flow of communication, potentially leading to a disconnection that can affect students’ mood, motivation, and their overall academic journey. Despite the recognized importance of non-verbal cues in mitigating online fatigue and enhancing student engagement, there remains a notable lack of effective implementation of AI tools capable of capturing and decoding these cues within virtual learning environments. Addressing this gap, our project introduces an AI framework that assesses student engagement and improves collaborative learning. It leverages the power of sentiment analysis to interpret emotions from written feedback and incorporates advanced facial expression detection to gain a comprehensive understanding of the students’ learning experiences. By integrating a recurrent neural network (RNN) with attention mechanisms, our model analyzes the context and sentiment of student feedback, while employing regularization to avoid overemphasis on irrelevant aspects. Concurrently, our use of convolutional neural networks (CNNs) effectively recognizes and interprets a wide array of emotional states from facial cues, thus offering a nuanced view of the unspoken dimensions of student interaction.
To further refine the collaborative learning process, we have incorporated the Upper Confidence Bound (UCB) algorithm for strategic student team formation. This reinforcement learning strategy fine-tunes the balance between students’ preference for familiar teammates and the introduction of diverse team compositions. It achieves this by not only considering individual academic strengths but also integrating student preferences, thereby forming teams that are academically balanced and in tune with student interests. In conclusion, our AI framework provides a significant advancement in virtual learning, promising to bridge the gap in non-verbal communication and thereby enhancing the quality and effectiveness of online education.
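The exploration-exploitation balance that the Upper Confidence Bound approach provides can be sketched with the standard UCB1 rule. In the illustration below, each arm stands for a hypothetical team-formation strategy and the reward is a fixed, made-up satisfaction score. The abstract does not specify how arms or rewards are defined in the actual framework, so this is a sketch under those assumptions only.

```python
import math


def ucb1_select(counts, totals, t, c=2.0):
    """Return the index of the arm with the highest UCB1 score.

    counts[i] is how often arm i was tried, totals[i] is its summed reward,
    and t is the current round number (starting at 1).
    """
    # Try every arm once before applying the confidence bound.
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    scores = [
        totals[arm] / counts[arm] + math.sqrt(c * math.log(t) / counts[arm])
        for arm in range(len(counts))
    ]
    return max(range(len(scores)), key=scores.__getitem__)


def simulate_team_selection(reward_fn, n_arms, rounds):
    """Run UCB1 for a number of rounds and return how often each arm was chosen."""
    counts = [0] * n_arms
    totals = [0.0] * n_arms
    for t in range(1, rounds + 1):
        arm = ucb1_select(counts, totals, t)
        counts[arm] += 1
        totals[arm] += reward_fn(arm)
    return counts


# Hypothetical arms: 0 = familiar teammates, 1 = mixed teams, 2 = fully diverse teams.
# Hypothetical fixed rewards standing in for observed team outcomes.
mean_reward = [0.4, 0.7, 0.5]
counts = simulate_team_selection(lambda arm: mean_reward[arm], n_arms=3, rounds=500)
```

The bonus term shrinks as an arm is tried more often, so less familiar options keep being sampled occasionally while the best-performing option is chosen most of the time.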

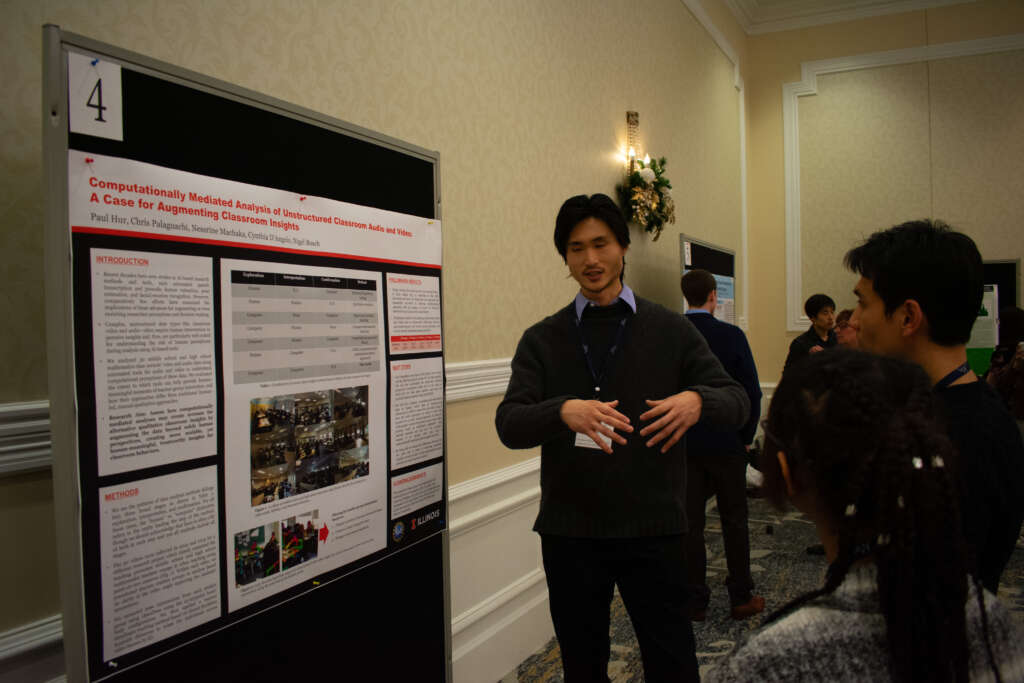

Computationally Mediated Analysis of Unstructured Classroom Audio and Video: A Case for Augmenting Classroom Insights

Paul Hur, University of Illinois at Urbana-Champaign

While recent decades in education research have seen strides in the development of AI-based methods and tools, comparatively few efforts have examined the implications of these computational advances for augmenting or even enriching human perceptions and decision-making. When dealing with complex, unstructured data such as classroom video and audio files, understanding the role of researcher perceptions during data analysis becomes particularly important, as relying solely on computationally dominant approaches (e.g., supervised/unsupervised machine learning, statistical hypothesis testing) may not be enough to best represent or interpret human behaviors in the data. In this work, we analyzed 30 middle school and high school mathematics class periods’ video and audio data using automated tools for audio (openSMILE and Whisper) and video (OpenPose) to understand computational perceptions of these data. We were motivated to understand how teacher–student interactions were unique in terms of audio (prosodic and semantic characteristics) and video (pose characteristics). In trials on smaller subsets of the whole data set, pose data appears to be partially successful in filtering teacher–student interaction clips in some cases while potentially misleading in others. Given what we found through these approaches, our future plans are to compare computationally mediated vs. human-coded selection of teacher–student interaction video clips by qualitatively evaluating classroom insights through open coding. We situate our approach by arranging common data analysis methods in terms of the extent of human vs. machine involvement across what we see as common stages of data analysis: exploration, interpretation, and confirmation.
Our work highlights how computationally mediated analyses can create avenues for alternative qualitative classroom insights by augmenting the data beyond solely human perspectives, creating more scalable, yet human-meaningful, trustworthy insights for classroom behaviors.

Modeling Students’ Learning Effectiveness through Large Language Model Simulations

Bang Nguyen, University of Notre Dame

The challenge of measuring and enhancing learning effectiveness is essential to the goals of many instructors. Through different means of assessment, including both direct approaches (such as exams, essays, course projects, etc.) and indirect strategies (such as student feedback, retention rates, course pathways, etc.), instructors strive to have a better understanding of the effectiveness of learning within the classroom. Based on these assessments, instructors then fine-tune their curriculum to best support student learning. However, this comprehensive process demands significant time and resources, highlighting the need for the automation of certain aspects to improve efficiency. Our research delves into the use of Large Language Models (LLMs) for simulating students’ learning effectiveness. This novel approach leverages recent advancements in LLMs, found to be capable of mimicking human-like behaviors such as personalities, daily habits, and social interactions, to model students’ learning effectiveness. Our focus lies in the capacity of LLMs to model a range of factors contributing to students’ learning, including but not limited to course prerequisites, study hours, past exam scores, attendance, and lecture content. This approach aims to provide instructors with actionable insights into how different curricula might influence students’ learning over time. To validate our LLM simulations, we employ psychometric methods. For instance, in a simulated setting where LLM-simulated students undertake standardized tests, we expect that their grade distribution will follow typical patterns (such as normal or skewed distributions). This method offers a promising avenue to assess the potential of LLMs in representing diverse levels of student learning effectiveness accurately. The implications of our research are significant.
By employing LLM agents to role-play as students, we foresee a future where designing exams, learning materials, and lecture content can be more efficient and effective.
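One simple way to check whether a simulated grade distribution looks roughly symmetric or skewed, as the validation step above anticipates, is to compute its skewness. The sketch below uses the population skewness (third central moment divided by the cubed standard deviation) on hypothetical grades. The abstract does not name the specific psychometric methods used, so this is an assumed illustration only.

```python
def skewness(values):
    """Population skewness: third central moment over the cubed standard deviation."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    if m2 == 0:
        return 0.0  # All values identical: no spread, so no skew.
    return m3 / m2 ** 1.5


# Hypothetical grades from two simulated cohorts.
symmetric_grades = [60, 70, 80, 90, 100]
left_skewed_grades = [40, 85, 88, 90, 92]  # one low outlier pulls the tail left

symmetric_skew = skewness(symmetric_grades)
left_skew = skewness(left_skewed_grades)
```

A value near zero suggests a symmetric distribution, while a clearly negative value indicates a long tail of low scores.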

How Contexts Matter: Course-Level Correlates of Performance and Fairness Shift in Predictive Model Transfer

Joseph Olson, Teachers College Columbia University

Learning analytics research has highlighted that contexts matter for predictive models, but little research has explicated how contexts matter for models’ utility. Such insights are critical for real-world applications where predictive models are deployed across instructional and institutional contexts. Using administrative records and LMS behavioral traces from 37,089 students across 1,493 courses, we build predictive models trained on data from a single course and test them on subsequent courses, providing a comprehensive evaluation of both performance and fairness shifts when predictive models are transferred across different course contexts. We specifically quantify how model portability is moderated by differences in various course-level contextual factors such as subject matter, administrative features, learning design features, and demographics. Our findings reveal that individual contextual variables distinctly impact the fairness and predictive performance of the models. Our results suggest that model transfer produces clear performance drops on average across different course pairs. Fairness shifts have less consistent directions yet are more predictable from contextual differences. We also show that performance and fairness shifts co-vary differently along dimensions of course-level contextual features. Not only are some groups of contextual features better predictors of one type of shift than the other, but some specific features positively correlate with one type of shift while negatively correlating with the other. However, at the aggregate level, there is no clear trade-off between performance and fairness. Consequently, these insights suggest that systematically verifying predictive variables for both model performance and fairness might facilitate an optimal balance between the two. These findings have important implications for both researchers and practitioners. Researchers should not overlook the portability of fairness when studying distribution shifts.
Learning engineers should be mindful of how different contextual features affect both performance and fairness portability when designing predictive models of student performance.

iSEA: Instructor-in-the-loop Student Engagement Analytics via FAccT AI

Wangda Zhu, University of Florida / College of Education

Artificial Intelligence (AI) techniques have become increasingly popular for analyzing student engagement in online environments over the last decade; however, these approaches are still not widely adopted in practice by instructors, partly due to AI’s black-box problem. Engagement analytics may fail to capture the theoretical complexity of the engagement construct, and instructors may lack trust in and control over the techniques. To tackle these issues, we designed and developed the iSEA toolkit for engagement analytics, which 1) involves instructors in the analytics design, 2) visualizes the analytics process, and 3) applies a fair AI algorithm to adjust analytic results. We then conducted interviews with six instructors who had various online teaching experiences to understand their concerns about applying AI in engagement analytics and their experience using iSEA. Participants showed diverse ways of analyzing student engagement via iSEA and expressed how it improved their trust in engagement analytics. The results showcase an effective way to integrate instructors’ opinions, learning theory, and AI techniques toward applying AI in real-world settings when analytics are more transparent. Poster content: a. figures: iSEA interface images, a study design diagram, a user journey diagram; b. text: summary of instructors’ opinions on trust in engagement analytics and whether and how iSEA improves their trust. Objectives: The goal of this poster is to demonstrate an innovative and effective way to enhance instructors’ trust in AI for analyzing student engagement by involving them in the technique design process. Relevance: a. investigate why instructors do not trust AI in engagement analytics in real-world settings; b. present a tool for enhancing instructors’ trust by involving them in the technique design; c. evaluate whether and how this tool enhances instructors’ trust.

Learning Analytics in Organic Chemistry

Thomas Joyce, University of Notre Dame

Supporting and enhancing student learning is one of the main objectives of learning analytics. Although current learning analytics practices can identify at-risk students and predict student performance, their implications are limited due to a narrow focus on helping a handful of lower-performing students achieve predetermined instructional goals. In light of these issues, our project aimed to holistically analyze student learning performance in a foundational organic chemistry course at the University of Notre Dame by employing a performance group gap approach. We first classified students into Thriving, Succeeding, and Developing performance groups based on their final course grades. We then used weekly course assessment analysis and item analysis to identify the most significant discrepancies in performance between the groups on exams, quizzes, and specific topics and questions. We also analyzed the differences in study habits, stress levels, confidence, and preparedness between the performance groups using student survey data from midterm assessment wrappers. Lastly, we created interpretable statistical and machine learning models to predict students’ final exam grades and final performance groups at early course stages. Our poster will present the analytical results of this project through intuitive data visualizations, such as exam score timelines to highlight the differences in scores between each performance group and Sankey diagrams to display the changes in students’ study habits across midterm exams. Our figures will include descriptive captions to provide course instructors with practical methods of closing performance gaps in future course offerings. Overall, the findings of this project demonstrate an effective way to analyze student performance in foundational STEM courses that incorporates trustworthy AI algorithms for prediction and classification.
Our analytical approach also guides instructors toward leveraging AI insights and technologies to improve student learning outcomes.
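The performance group gap approach described in this abstract can be illustrated with a small sketch that assigns students to the three groups and finds the assessment with the largest gap between the Thriving and Developing groups. The grade cut-offs, field names, and scores below are hypothetical, since the abstract does not state the actual thresholds or data layout.

```python
from statistics import mean


def performance_group(final_grade):
    """Assign a group from a final grade. The cut-offs here are hypothetical."""
    if final_grade >= 90:
        return "Thriving"
    if final_grade >= 75:
        return "Succeeding"
    return "Developing"


def group_means(students, assessment):
    """Mean score on one assessment for each performance group."""
    by_group = {}
    for s in students:
        by_group.setdefault(performance_group(s["final"]), []).append(s[assessment])
    return {group: mean(scores) for group, scores in by_group.items()}


def largest_gap(students, assessments):
    """Assessment with the largest Thriving-minus-Developing gap, plus all gaps.

    Assumes both groups contain at least one student.
    """
    gaps = {}
    for a in assessments:
        means = group_means(students, a)
        gaps[a] = means["Thriving"] - means["Developing"]
    return max(gaps, key=gaps.get), gaps


# Hypothetical student records.
students = [
    {"final": 95, "exam1": 92, "exam2": 96},
    {"final": 91, "exam1": 88, "exam2": 94},
    {"final": 80, "exam1": 82, "exam2": 78},
    {"final": 70, "exam1": 80, "exam2": 60},
    {"final": 65, "exam1": 76, "exam2": 56},
]

widest, gaps = largest_gap(students, ["exam1", "exam2"])
```

Ranking assessments by gap in this way points instructors to the exams or topics where the groups diverge most.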

Assessing ChatGPT’s Proficiency in Generating Accurate Questions and Answers for Algebra 1

Meredith Sanders, University of Notre Dame

The rise of Artificial Intelligence (AI) technology has proved to be advantageous in many regards, especially within the field of education. Programs such as ChatGPT have allowed students and teachers alike to expand their understanding of different concepts by challenging them to think deeply about the material they are learning/teaching. Specifically, ChatGPT uses conversational dialogue to perform a variety of functions, including generating questions and solutions across multiple subjects. While this is groundbreaking, it is critical to assess the program’s accuracy first. The objective of this research was to investigate how ChatGPT generates questions and offers answers on topics related to Algebra 1. Topics included setting up equations, graphical transformations, and arithmetic sequences. In total, this project examined over 200 problems, which consisted of screenshot and LaTeX versions of the questions and solutions. Each problem was categorized based on question difficulty, and both versions of the questions and solutions were examined for errors. Question-related errors were classified as either incorrect answer choice, double answer choice, triple answer choice, unsolvable, or mismatched LaTeX and screenshot, whereas solution-related errors were divided into the following categories: careless, procedural, computational, and explanatory. After identifying the issues ChatGPT encountered with questions and answers, we conducted an analysis using RStudio, primarily focusing on the questions as they represented the main source of error. The results showed that the most common issue ChatGPT faced was providing incorrect answer choices for the questions it generated. In addition, calculations for regression analysis demonstrate a strong and statistically significant correlation, indicating that the type of error made is directly related to the question’s level of difficulty.
In conclusion, this study underscores the potential of AI in education but highlights the need for ongoing evaluation and refinement to ensure the accuracy and reliability of AI-powered educational tools.

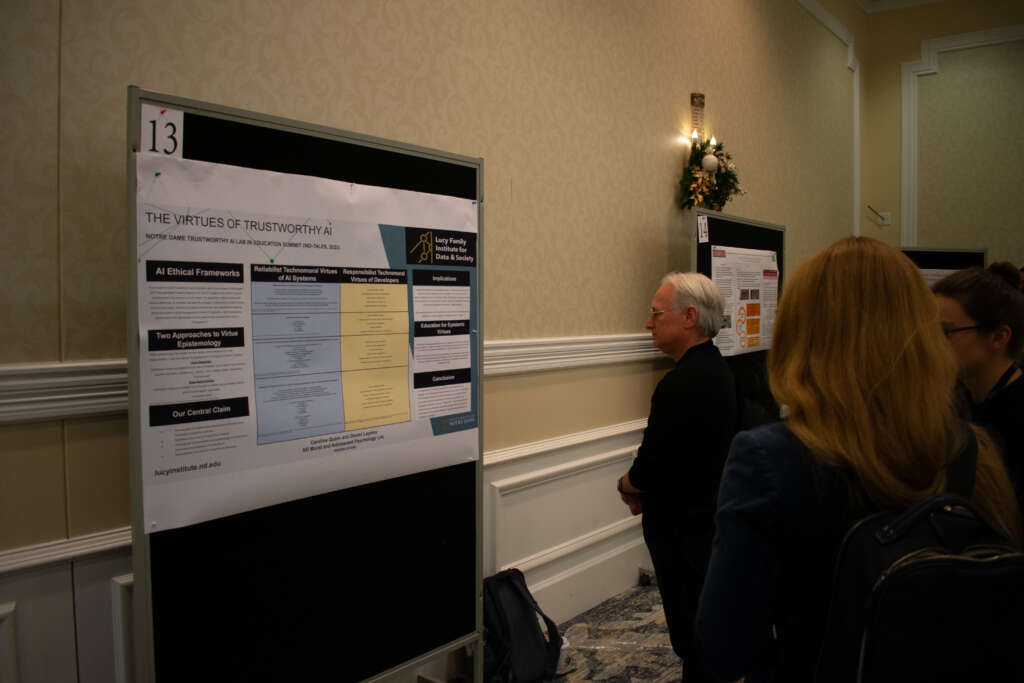

The Virtues of Trustworthy AI

Caroline Quinn, University of Notre Dame

The promise and challenge of Artificial Intelligence (AI) and Information and Communication Technologies (ICTs) have provoked widespread reflection on the nature of their interface with human users and their impact on human behavior. How much should we trust AI and what makes it trustworthy? Can virtuous machines operate on ethical principles, and if so, which principles are viable? These questions have generated a significant literature that attempts to delineate AI design features that would seemingly cultivate our trust in it. The language of trust, however, and conditions of trustworthiness, along with recent interest in “technomoral virtues” (Vallor, 2016), raise ethical considerations that traditional ethical theories (deontology, utilitarianism, virtue ethics) do not easily address. However, we will argue that virtue epistemology offers a more promising way forward for understanding the trustworthy features of AI. There is a debate within virtue epistemology between the so-called reliabilist and responsibilist camps regarding the nature of intellectual virtues. Responsibilism takes an agent-based approach to intellectual virtues that attach to selfhood and character, traits for which we hold persons responsible. This could work for trustworthy AI if we abandon the language of character-selfhood but retain holding AI responsible. In contrast, the reliabilist approach describes faculty virtues: stable, reliable faculties that lead reliably to well-attested knowledge. Put differently, reliabilism focuses on the dispositional competencies of subjects/entities that can be reliably counted on to gain desired outcomes (trust, well-attested knowledge). The difference, then, is that reliabilism conceives intellectual virtues to be reliable faculties or competencies, while responsibilism views intellectual virtues as character virtues as understood in virtue theory.
Although the reliabilist-responsibilist distinction is often breached in recent work in virtue epistemology (e.g., Fleicher, 2017), it is a useful lens through which to appraise the nature of AI traits, and we will attempt to frame an integrative perspective.

Making A.I. Dance

Kathryn Regala, University of Notre Dame

This study investigates the innovative application of Large Language Models (LLMs) such as ChatGPT-4 and Claude 2 in the realm of dance choreography, a field traditionally not associated with language-based AI models. Recognizing the expansive capabilities of LLMs beyond written humanities, my research explores their potential in interpreting and creating dance. Utilizing the CRAFT method (Context, Role, Action, Format, Target) to guide the LLMs, I prompted them to choreograph a short dance to a popular song from 2007, within the scope of their training data. The models were tasked to self-assess their output based on specific criteria, including musical association, style, and creativity. I also evaluated the choreography based on my experience with dance choreography. The iterative process involved in this research, incorporating Tree of Thought (ToT) prompting, led to the refinement of the models’ outputs. My findings indicate that ChatGPT-4 and Claude 2 can generate basic dance choreography, demonstrating an understanding of musical composition, dance styles, and the interplay between emotion, music, and movement. However, the resulting choreography lacked specificity, attributed to the limited written choreography data available to the models. This study underscores the potential of LLMs in creative fields like dance, suggesting that with further development and the integration of structured dance data, LLMs could offer more nuanced and detailed choreographic outputs and enable richer applications in generating detailed creative movement.
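The CRAFT structure named in this abstract (Context, Role, Action, Format, Target) lends itself to a simple prompt template. The sketch below assembles one labeled line per field. The helper name and the example wording are hypothetical and are not the prompts used in the study.

```python
CRAFT_FIELDS = ("Context", "Role", "Action", "Format", "Target")


def build_craft_prompt(**sections):
    """Assemble a prompt with one labeled line per CRAFT field, in order.

    Expects keyword arguments named after the lowercase field names.
    """
    missing = [f for f in CRAFT_FIELDS if f.lower() not in sections]
    if missing:
        raise ValueError(f"missing CRAFT fields: {missing}")
    return "\n".join(f"{f}: {sections[f.lower()]}" for f in CRAFT_FIELDS)


# Hypothetical example values for illustration only.
prompt = build_craft_prompt(
    context="A short routine is needed for a popular song from 2007.",
    role="You are an experienced dance choreographer.",
    action="Choreograph a 30-second dance that follows the song's rhythm.",
    format="A numbered list of counts with one movement per count.",
    target="Intermediate dancers rehearsing in a studio.",
)
```

Keeping the five fields explicit makes it easy to vary one of them, such as the target audience, while holding the rest of the prompt fixed across iterations.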